
1. Challenges for the Modern Blockchain Data Stack

A modern blockchain indexing startup can face several challenges, including:

- Massive amounts of data. As the amount of data on the blockchain grows, the data index has to scale to handle the increased load and still provide efficient access to the data. The result is higher storage costs, slower metric calculation, and more load on the database server.

- Complex data processing pipelines. Blockchain technology is complex, and building a comprehensive and reliable data index requires a deep understanding of the underlying data structures and algorithms. The wide variety of blockchain implementations adds to that complexity. For example, NFTs on Ethereum are usually created in smart contracts that follow the ERC721 and ERC1155 formats. On Polkadot, by contrast, they are often built directly into the blockchain runtime. Both should be treated as NFTs and stored as such.

- Integration capabilities. To provide maximum value to users, a blockchain indexing solution may need to integrate its data index with other systems, such as an analytics platform or an API. This is challenging and requires significant effort in architecture design.
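The NFT example above can be made concrete with a small sketch. It is only an illustration of mapping contract-based and runtime-based NFTs to one shared record shape; the `NftRecord` fields, the two mapper functions, and the sample identifiers are hypothetical and are not Footprint Analytics' actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NftRecord:
    """Hypothetical shared shape for an NFT, whatever chain it comes from."""
    chain: str
    collection_id: str
    token_id: str
    standard: str
    owner: str


def from_evm_transfer(chain, contract_address, token_id, to_address, standard):
    """Map an NFT defined by an ERC721/ERC1155 smart contract to the shared shape."""
    if standard not in ("ERC721", "ERC1155"):
        raise ValueError(f"unsupported EVM NFT standard: {standard}")
    return NftRecord(chain, contract_address.lower(), str(token_id),
                     standard, to_address.lower())


def from_runtime_item(chain, collection, item, owner):
    """Map an NFT that lives in the chain runtime (no contract) to the same shape."""
    return NftRecord(chain, str(collection), str(item), "runtime", owner)


# Made-up sample values, for illustration only.
records = [
    from_evm_transfer("ethereum", "0xAbC0000000000000000000000000000000000001",
                      42, "0xDEF0000000000000000000000000000000000002", "ERC721"),
    from_runtime_item("polkadot", 7, 3, "example-owner-account"),
]

for record in records:
    print(record)
```

Once both sources land in the same record type, downstream metrics can treat them uniformly and only the mappers need to know about chain-specific details.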

As blockchain technology has become more popular, the amount of data stored on the blockchain has increased. More people are using the technology, and every transaction adds new data to the chain. In addition, blockchain technology has evolved from simple money-transfer applications, such as those built around Bitcoin, to more complex applications that implement business logic in smart contracts. These smart contracts can generate large amounts of data, which adds to the complexity and size of the blockchain. Over time, this has produced a larger and more complex blockchain.

In this article, we review the evolution of Footprint Analytics' technology architecture in stages, as a case study of how the Iceberg-Trino technology stack addresses the challenges of on-chain data.

Footprint Analytics has indexed data from about 22 public blockchains, 17 NFT marketplaces, 1,900 GameFi projects, and over 100,000 NFT collections into a single semantic abstraction data layer. It is the most comprehensive blockchain data warehouse solution in the world.

Blockchain data, which includes more than 20 billion rows of financial transaction records that data analysts query frequently, is different from the ingestion logs in a traditional data warehouse.

We have gone through three major upgrades in the past several months to meet growing business requirements:

2. Architecture 1.0: BigQuery

When we started Footprint Analytics, we used Google BigQuery as our storage and query engine. BigQuery is a great product. It is extremely fast, easy to use, and provides dynamic computing power and a flexible UDF syntax that helped us get things done quickly.

However, BigQuery also has some problems.

- Data is not compressed, which leads to high costs, especially when storing the raw data of Footprint Analytics' 22+ blockchains.

- Insufficient concurrency: BigQuery only supports 100 concurrent queries, which is not suitable for the high-concurrency scenarios Footprint Analytics faces when serving many analysts and users.

- Lock-in with Google BigQuery, which is a closed-source product.

So we decided to explore alternative architectures.

3. Architecture 2.0: OLAP

We were very interested in some of the OLAP products that had become popular. The most attractive advantage of OLAP is query response time: it typically returns results over massive amounts of data within seconds, and it can also support thousands of concurrent queries.

We picked one of the best OLAP databases, Doris, to give it a try. The engine performs well. However, we soon ran into some other problems:

- Data types such as Array and JSON are not yet supported (as of November 2022). Arrays are a common data type in some blockchains, for example the topic field in EVM logs. Being unable to compute on arrays directly limits our ability to compute many business metrics.

- Limited support for DBT and for merge statements. These are common requirements for data engineers in ETL/ELT scenarios where we need to update some newly indexed data.
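To show why array support matters for EVM logs, here is a minimal sketch that reads the topic array of a log entry. The first topic of a non-anonymous event is the hash of its signature, and indexed address arguments are stored as 32-byte values with the address in the last 20 bytes. The sample log and the helper names are made up for illustration; only the standard `Transfer(address,address,uint256)` topic hash is a real constant.

```python
# keccak256("Transfer(address,address,uint256)"), shared by ERC20 and ERC721.
TRANSFER_TOPIC = "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"


def topic_to_address(topic: str) -> str:
    """An indexed address is left-padded to 32 bytes; keep the last 20 bytes."""
    return "0x" + topic[-40:]


def decode_transfer(log: dict):
    """Return sender and receiver if the log is a Transfer event, else None."""
    topics = log["topics"]
    if len(topics) < 3 or topics[0] != TRANSFER_TOPIC:
        return None
    return {"from": topic_to_address(topics[1]),
            "to": topic_to_address(topics[2])}


# Hypothetical log entry with made-up addresses.
sample_log = {
    "topics": [
        TRANSFER_TOPIC,
        "0x" + "00" * 12 + "11" * 20,
        "0x" + "00" * 12 + "22" * 20,
    ],
}

decoded = decode_transfer(sample_log)
print(decoded)
```

In a query engine with native array types, the same lookup is an element access on the topics column; without them, the array has to be flattened or pre-parsed before any metric can use it.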

That said, we could not use Doris for our whole production data pipeline, so we tried to use Doris as an OLAP database that solved part of the problem: acting as a query engine that provides fast, highly concurrent query capabilities.

Unfortunately, we could not replace BigQuery with Doris, so we had to periodically synchronize data from BigQuery to Doris and use Doris as a query engine. This synchronization had several problems. One was that update writes piled up quickly whenever the OLAP engine was busy serving queries to front-end clients. The write speed then suffered, and synchronization took much longer and sometimes could not finish at all.

We realized that OLAP could solve several of the problems we were facing but could not become the turnkey solution for Footprint Analytics, especially for the data processing pipeline. Our problem is bigger and more complex, and OLAP as a query engine alone was not enough for us.

4. Architecture 3.0: Iceberg + Trino

Welcome to Footprint Analytics architecture 3.0, a complete overhaul of the underlying architecture. We redesigned the entire architecture from the ground up to separate data storage, computation, and querying into three different pieces. We drew lessons from the two earlier Footprint Analytics architectures and learned from the experience of other successful big data projects such as Uber, Netflix, and Databricks.

4.1. Introducing the data lake

We first turned our attention to the data lake, a newer type of data storage for both structured and unstructured data. A data lake is a good fit for on-chain data, because the formats of on-chain data range widely from unstructured raw data to the structured abstraction data Footprint Analytics is known for. We expected the data lake to solve the data storage problem, and ideally it would also support mainstream compute engines such as Spark and Flink, so that integrating with different types of processing engines would not be painful as Footprint Analytics evolves.

Iceberg integrates very well with Spark, Flink, Trino, and other compute engines, so we can choose the most appropriate engine for each of our metrics. For example:

- For metrics that require complex computational logic, Spark is the choice.

- Flink for real-time computation.

- For simple ETL tasks that can be done in SQL, we use Trino.
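The selection rule in the list above can be written down as a tiny routing function. This is only a sketch of the decision as described here; the `Engine` enum, the `pick_engine` function, and its two flags are hypothetical names, not part of any real scheduler.

```python
from enum import Enum


class Engine(Enum):
    SPARK = "spark"
    FLINK = "flink"
    TRINO = "trino"


def pick_engine(*, real_time: bool, sql_only: bool) -> Engine:
    """Route a metric job to a compute engine using the rules listed above."""
    if real_time:
        return Engine.FLINK   # real-time computation
    if sql_only:
        return Engine.TRINO   # simple ETL expressible in SQL
    return Engine.SPARK       # complex computational logic


print(pick_engine(real_time=False, sql_only=True))
```

Because all three engines read and write the same Iceberg tables, changing the engine for a metric does not require moving the data.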

4.2. Query engine

With Iceberg solving the storage and computation problems, we had to think about choosing a query engine. There were not many options available, and we considered only a small number of candidates.

The most important thing we considered before going deeper was that the future query engine had to be compatible with our current architecture:

- Support BigQuery as a data source

- Support DBT, which we rely on to produce many metrics

- Support the BI tool Metabase

Based on the above, we chose Trino. Its support for Iceberg is very good, and the team was so responsive that a bug we raised was fixed the next day and released in the latest version the following week. That made it the best choice for the Footprint team, which also needs fast implementation turnaround.

4.3. Performance testing

Once we had decided on our direction, we ran a performance test on the Trino + Iceberg combination to see whether it met our needs. To our surprise, the queries were extremely fast.

Given that Presto + Hive had for years been the weakest performer in comparisons amid all the OLAP hype, the Trino + Iceberg combination completely blew us away.

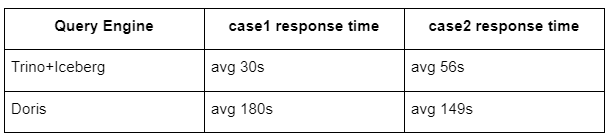

Here are the results of our tests.

Case 1: join a large dataset

An 800 GB table1 is joined with a 50 GB table2, followed by complex business calculations.

Case 2: run a distinct query on a single large table

Test SQL: select distinct(address) from table group by date

The Trino + Iceberg combination is about 3 times faster than Doris in the same configuration.
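The second test query is given only in shorthand, so its exact semantics are not fully specified. One plausible reading is a count of distinct values per date, assumed here to be wallet addresses. The sketch below shows that logic on a handful of made-up rows; it says nothing about how Trino or Doris execute the query.

```python
from collections import defaultdict

# Made-up (date, address) rows standing in for the large table.
rows = [
    ("2022-11-01", "0xaaa"),
    ("2022-11-01", "0xbbb"),
    ("2022-11-01", "0xaaa"),  # duplicate address on the same date
    ("2022-11-02", "0xaaa"),
]


def distinct_addresses_by_date(rows):
    """Group rows by date and count the distinct addresses seen on each date."""
    seen = defaultdict(set)
    for date, address in rows:
        seen[date].add(address)
    return {date: len(addresses) for date, addresses in seen.items()}


result = distinct_addresses_by_date(rows)
print(result)
```

At production scale the per-date sets are the expensive part, which is why a distinct query on a large table makes a useful stress test for a query engine.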

There was another surprise: because Iceberg can use data formats such as Parquet and ORC, which compress the data as they store it, Iceberg's table storage takes only about 1/5 of the space of the other data warehouses. The storage size of the same table in the three databases is as follows:

Note: The tests above are examples we encountered in actual production and are for reference only.
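The storage saving comes largely from storing data column by column and compressing it. The sketch below illustrates that effect with the Python standard library only. It does not use Parquet or ORC, the rows are synthetic, and the sizes it prints are not comparable to the production figures discussed above.

```python
import json
import zlib

# Synthetic rows with repetitive values, loosely shaped like transaction data.
rows = [
    {"chain": "ethereum", "block": 16_000_000 + i // 100,
     "status": "success", "value": i % 7}
    for i in range(10_000)
]

# Row-oriented and uncompressed: one JSON object per line.
row_bytes = "\n".join(json.dumps(r) for r in rows).encode()

# Column-oriented: gather each field into its own list, then compress
# every column separately so similar values sit next to each other.
columns = {name: [r[name] for r in rows] for name in rows[0]}
columnar_compressed = sum(
    len(zlib.compress(json.dumps(values).encode()))
    for values in columns.values()
)

print("row-oriented, uncompressed bytes:", len(row_bytes))
print("column-oriented, compressed bytes:", columnar_compressed)
```

Real columnar formats go further with typed encodings such as dictionary and run-length encoding, but the principle is the same: similar values stored together compress well.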

4.4. Upgrade effect

The performance test reports gave us enough confidence to proceed. It took our team about 2 months to complete the migration, and this is a diagram of our architecture after the upgrade.

- Multiple compute engines match our different needs.

- Trino supports DBT and can query Iceberg directly, so we no longer have to deal with data synchronization.

- The strong performance of Trino + Iceberg allows us to open up all Bronze data (raw data) to our users.

5. Summary

Since its launch in August 2021, the Footprint Analytics team has completed three architecture upgrades in less than a year and a half, driven by a strong desire and determination to bring the benefits of the best database technology to crypto users, and by solid execution in implementing and upgrading its underlying infrastructure and architecture.

The Footprint Analytics architecture 3.0 upgrade has brought a new experience to its users, allowing users from different backgrounds to gain insights across more diverse uses and applications:

- Built with the Metabase BI tool, Footprint gives analysts access to decoded on-chain data, lets them explore with complete freedom in their choice of tools (no-code or hand-coded), query the entire history, and cross-examine datasets to get insights in no time.

- Integrate both on-chain and off-chain data for analysis across web2 + web3

- By building and querying metrics on top of Footprint's business abstraction, analysts and developers save time on 80% of repetitive data processing work and can focus on meaningful metrics, research, and product solutions for their business.

- Seamless experience from Footprint Web to REST API calls, all based on SQL

- Real-time alerts and actionable notifications on key signals to support investment decisions
